by Doug Kreitzberg | Jun 22, 2020 | Cybersecurity, Disinformation, Phishing

In 1989, the U.S. Postal Service issued a set of stamps featuring four different dinosaurs. While the stamps look innocent enough, their release sparked controversy among paleontologists, and they even serve as an example of how misinformation works: by making something false appear to be true.

The controversy revolves around the inclusion of the brontosaurus, which, according to scientists at the time, never existed. In 1879, paleontologist O.C. Marsh described the bones of what he thought was a new species of dinosaur and called it the brontosaurus. However, as more scientists examined similar fossils, they realized that what Marsh had found was in fact a dinosaur previously identified as the apatosaurus, whose name, ironically, is Greek for “deceptive lizard.” Paleontologists were therefore rightly upset to see the brontosaurus included on a stamp alongside real dinosaurs.

More than 30 years later, however, these stamps may have something to teach us about how disinformation works today. They show that disinformation is not simply about falsehoods; it’s about presenting those falsehoods so that they seem true.

The stamps help illustrate this in three ways:

1) Authority

One way something can appear to be true is when the information comes from a figure of authority. Because the stamps were officially released by the U.S. government, the information on them takes on the appearance of truth. Of course, no one would think of the USPS as an authority on dinosaurs, and yet the postal service’s position of authority seems to guarantee the truth of what it presents. The appearance of authority, however misplaced, is often enough to make us believe something is true.

This is a tactic scammers use all the time. It’s the reason you’ve probably gotten plenty of robocalls claiming to be from the IRS. Phishing emails use the same tactic by spoofing the ‘from’ field and copying the logos of businesses and government agencies. Too often, we assume that information must be true simply because it appears to come from an authority.

2) Truths and a Lie

Another way something false can appear true is by placing it among things that are actually true. Because the other stamps in the collection (the tyrannosaurus, the stegosaurus, and the pteranodon) are real, the brontosaurus takes on the appearance of truth. When one piece of false information is placed alongside recognizably true information, it starts to look more and more like a truth.

Fake news on social media uses this tactic all the time. Phishing attacks also take advantage of this by replicating certain aspects of legitimate emails. This might include mentioning information in the news, such as COVID-19, or even including things like an unsubscribe link at the end of the email. This tactic works by using legitimate information and elements in an email to cover up what is fake.

3) Anchoring

The U.S. Postal Service did not invent the brontosaurus: in fact, the American Museum of Natural History named a skeleton “brontosaurus” in 1905. Once a claim has been stated as truth, it becomes very hard to dislodge. This was, in fact, the reasoning the U.S. Postal Service gave when challenged: “Although now recognized by the scientific community as Apatosaurus, the name Brontosaurus was used for the stamp because it is more familiar to the general population.” Anchoring is a key aspect of disinformation, especially when it comes to how long false claims persist.

Beyond Appearances

Overall, what the brontosaurus stamp shows us is that our ability to discern the true from the false largely depends on how information is presented to us. Scammers and phishers have understood this for a long time. The first step in critically engaging with information online is therefore to recognize that just because something appears true does not, in fact, make it true. Given the continued rise of disinformation, this is a lesson that is more important now than ever. In fact, it is unlikely disinformation will ever become extinct.

by Doug Kreitzberg | Jun 19, 2020 | Cyber Awareness, Human Factor, Phishing

When you want to form a new habit or learn something new, you may think the best way to start is to dedicate as much time and energy to it as you can. If you want to learn a new language, for example, you may think that spending a couple of hours every day doing vocab drills will help you learn faster. According to behavioral scientist BJ Fogg, however, you might be taking the wrong approach. Instead, it’s better to focus on what Fogg calls tiny habits: small, easy-to-accomplish actions that keep you engaged without overwhelming you.

Sure, if you study Spanish for three hours a day, you may learn at a fast rate. The problem is that too often we try to do too much too soon. When we set unrealistic goals or expect too much from ourselves, new habits become hard to maintain. If you spend only five minutes a day instead, chances are you will be able to sustain and grow the habit over a longer period of time and have a better chance of retaining what you’ve learned.

The Keys To Success

According to Fogg, three elements need to come together at the same moment to create lasting behavior change:

- Motivation: You have to want to make a change.

- Ability: The new habit has to be achievable.

- Prompt: There needs to be some notification or reminder that tells you it’s time to do the behavior.

Creating and sustaining new habits requires all three of these elements; if any one of them is missing, your new behavior won’t occur. For example, if you want to go for a 5-mile run, you’re going to need a lot of motivation to do it. But if you set smaller, easy-to-achieve goals, like running for 5 minutes, you need only a little motivation to do the new behavior.

The other key factor is to help yourself feel successful. Spending 2 minutes reviewing Spanish tenses may not feel like a big accomplishment, but by celebrating every little win you will reinforce your motivation to continue.

The Future of Cyber Awareness

Tiny habits can not only help people learn a new language or start flossing; they can also play an important role in forming safer, more conscious online practices. Our cyber awareness training program, The PhishMarket™, is designed with these exact principles in mind. The program combines two elements, both based on Fogg’s model:

Phish Simulations: Phish simulations expose people to different forms of phishing attacks and motivate them to be more alert when looking at their inbox. While most programs scold or punish users who fall for a phish, The PhishMarket™ instead uses positive reinforcement to encourage users to keep going.

Micro-Lessons: Unlike most training programs that just send you informative videos and infographics, The PhishMarket™ exclusively uses short, interactive lessons that engage users and encourage them to participate and discuss what they’ve learned. By keeping the lessons short, users only need to dedicate a few minutes a day and aren’t inundated with a barrage of information all at once.

Creating smart and safe online habits is vital to our world today. But traditional training techniques are too often boring, inconsistent, and end up feeling like a chore. Instead, we believe the best way to help people make meaningful changes in their online behavior is to focus on the small things. By leveraging Fogg’s tiny habits model, The PhishMarket™ has successfully helped users feel more confident in their ability to spot phish and disinformation.

by Doug Kreitzberg | May 28, 2020 | Cyber Awareness, Phishing, social engineering

With phishing campaigns now the #1 cause of successful breaches, it’s no wonder more and more businesses are investing in phish simulations and cybersecurity awareness programs. These programs are designed to strengthen the biggest vulnerability every business has, one that can’t be fixed through technological means: the human factor. One common misconception many employers have is that these programs should produce a steady reduction in phish clicks over time. After all, what is the point of investing in phish simulations if your employees aren’t clicking on fewer phish? A recent report from the National Institute of Standards and Technology (NIST), however, argues the opposite. Phish come in all shapes and sizes; some are easy to catch while others are far more cunning. So if your awareness program focuses only on phish that are easy to spot or contextually irrelevant to the business, a low phish click rate could create a false sense of security, leaving employees unprepared for more crafty phishing campaigns. It’s therefore important that phish simulations present a range of difficulty, and that’s where the phish scale comes in.

Weighing Your Phish

If phish simulations vary the difficulty of their phish, then employers should expect their phish click rates to vary as well. The problem is that this makes it hard to measure the effectiveness of the training. NIST therefore introduced the phish scale as a way to rate the difficulty of any given phish and weigh that difficulty when reporting the results of phish simulations. The scale focuses on two main factors:

#1 Cues

The first factor in the phish scale is the number of “cues” a phish contains. A cue is anything in the email that someone can look for to determine whether it is real or not. Cues range from technical indicators, such as suspicious attachments or an email address that differs from the sender’s display name, to the kind of content the email uses, such as an overly urgent tone or spelling and grammar mistakes. The idea is that the fewer cues a phish contains, the more difficult it will be to spot.

#2 Premise Alignment

The second factor, premise alignment, has the stronger influence on the difficulty of a phish. Essentially, premise alignment measures how closely the content of the email matches what an employee expects, or is used to seeing, in their inbox. If a phish containing a fake unpaid invoice is sent to an employee who does data entry, for example, that employee is more likely to spot it than someone in accounting. Similarly, a phish targeting the education sector is not going to be very successful if it is sent to a marketing firm. In general, the more closely a phish fits the context of a business and the employee’s role, the harder it will be to detect.
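To make the two factors concrete, here is a minimal Python sketch of how a cue count and a premise-alignment rating might be combined into one difficulty label. It is not NIST’s method: the cue bands, point values, labels, and the `Phish`, `cue_band`, and `difficulty` names are all hypothetical, chosen only so that fewer cues and stronger alignment both raise difficulty, with alignment weighted more heavily.

```python
from dataclasses import dataclass

# Hypothetical cue-count bands for illustration only; NIST's Phish Scale
# publishes its own categories.
FEW_CUES_MAX = 8
SOME_CUES_MAX = 14

# Hypothetical points: fewer cues and stronger premise alignment both make a
# phish harder to spot, and alignment is weighted more heavily.
CUE_POINTS = {"few": 2, "some": 1, "many": 0}
ALIGNMENT_POINTS = {"low": 0, "medium": 2, "high": 4}


@dataclass
class Phish:
    name: str
    cues: list[str]         # red flags a careful reader could notice
    premise_alignment: str  # "low", "medium", or "high" fit with the recipient's role


def cue_band(num_cues: int) -> str:
    """Bucket a raw cue count into few / some / many."""
    if num_cues <= FEW_CUES_MAX:
        return "few"
    if num_cues <= SOME_CUES_MAX:
        return "some"
    return "many"


def difficulty(phish: Phish) -> str:
    """Combine both factors into a coarse difficulty label."""
    score = CUE_POINTS[cue_band(len(phish.cues))] + ALIGNMENT_POINTS[phish.premise_alignment]
    if score >= 4:
        return "very difficult"
    if score >= 2:
        return "moderately difficult"
    return "least difficult"


# The same fake-invoice email, rated for two different recipients.
invoice_cues = ["urgent payment demand", "sender address differs from display name"]
to_accounting = Phish("Unpaid invoice (accounting)", invoice_cues, "high")
to_data_entry = Phish("Unpaid invoice (data entry)", invoice_cues, "low")

print(difficulty(to_accounting))  # very difficult
print(difficulty(to_data_entry))  # moderately difficult
```

Because alignment carries more weight, the identical email rates as harder for the accounting recipient than for the data-entry recipient, mirroring the invoice example in this section.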

Managing Risk and Preparing for the Future

The phish scale does more than help businesses understand why phish click rates vary. Understanding how the difficulty of a phish affects factors such as response times and report rates will add depth to the reporting of phish simulations and, ultimately, give organizations a more accurate view of their phish risk. In turn, this will inform an organization’s broader security risk profile and strengthen its ability to respond to those risks.
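The reporting idea in this section can be sketched in a few lines of Python. Assuming each simulated phish already carries a difficulty label (from the phish scale or any other rating), the hypothetical `rates_by_difficulty` function below breaks click and report rates out per difficulty tier instead of reporting one blended number. The sample results are invented purely for illustration.

```python
from collections import defaultdict


def rates_by_difficulty(results):
    """results: iterable of (difficulty_label, clicked, reported) tuples."""
    totals = defaultdict(lambda: {"sent": 0, "clicked": 0, "reported": 0})
    for label, clicked, reported in results:
        tally = totals[label]
        tally["sent"] += 1
        tally["clicked"] += int(clicked)
        tally["reported"] += int(reported)
    # Turn raw counts into per-tier rates.
    return {
        label: {
            "click_rate": t["clicked"] / t["sent"],
            "report_rate": t["reported"] / t["sent"],
        }
        for label, t in totals.items()
    }


# Invented sample: eight simulated sends, four easy and four hard.
sample = [
    ("least difficult", False, True),
    ("least difficult", False, True),
    ("least difficult", True, False),
    ("least difficult", False, False),
    ("very difficult", True, False),
    ("very difficult", True, False),
    ("very difficult", False, True),
    ("very difficult", False, False),
]

for tier, rates in rates_by_difficulty(sample).items():
    print(tier, rates)
```

In this made-up sample the blended click rate is 3 of 8 (37.5%), which hides the fact that the very difficult phish were clicked twice as often as the easy ones and reported half as often.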

The phish scale can also play an important role in the evolving landscape of social engineering attacks. As email filtering systems become more advanced, phishing attacks may lessen over time. But that will only lead to new forms of social engineering across different platforms. NIST therefore hopes that the work done with the phish scale can also help manage responses to these threats as they emerge.

by Doug Kreitzberg | May 14, 2020 | Cyber Awareness, Phishing, Risk Management

Remember the sales contest from the movie Glengarry Glen Ross?

“First prize is a Cadillac Eldorado… Third prize is you’re fired.”

We seem to think that, in order to motivate people, we need both a carrot and a stick. Reward or punishment. And yet, if we want people to change behaviors on a sustained basis, there’s only one method that works: the carrot.

One core concept I learned while applying behavior-design practices to cyber security awareness programming was that, if you want sustained behavior change (such as reducing phish susceptibility), you need to design behaviors that make people feel positive about themselves.

The importance of positive reinforcement is one of the main components of the model developed by BJ Fogg, the founder and director of Stanford’s Behavior Design Lab. Fogg discovered that behavior happens when three elements – motivation, ability, and a prompt – come together at the same moment. If any element is missing, behavior won’t occur.

I worked in collaboration with one of Fogg’s behavior-design consulting groups to bring these principles to cyber security awareness. We found that, in order to change digital behaviors and enhance a healthy cyber security posture, you need to help people feel successful. And you need the behavior to be easy to do, because you cannot assume the employee’s motivation is high.

Our program therefore uses positive reinforcement whenever a user correctly reports a phish, combined with daily exposure to cyber security awareness concepts through interactive lessons that take only 4 minutes a day.

To learn more about our work, you can read Stanford’s Peace Innovation Lab article about the project.

The upshot is that behavior-design concepts like these will not only help drive change toward better cyber security awareness; they can drive change for all of your other risk management programs, too.

There are many facets to the behavior design process, but if you focus on these two things (BJ Fogg’s Maxims) your risk management program stands to be in a better position to drive the type of change you’re looking for:

1) help people feel good about themselves and their work

2) promote behaviors that they’ll actually want to do

After all, I want you to feel successful, too.

by Doug Kreitzberg | Apr 2, 2020 | Coronavirus, Human Factor, Phishing

Online scammers continue to use the COVID-19 crisis to their advantage. We have already seen phishing campaigns against the healthcare industry. The newest target? Small businesses. This week, the Small Business Administration Office of the Inspector General (SBA OIG) sent out a letter warning of an increase in phishing scams targeting business owners and related to the new CARES Act.

CARES Act Loan Scams

The uptick in phishing scams imitating the SBA is primarily linked to the recent stimulus bill the government passed in response to the ongoing COVID-19 crisis. The bill, called the CARES Act, includes $350 billion in loans for small businesses. Given the current crisis, many businesses are eager to apply for loans, opening the door to new forms of phishing scams.

In addition, the scale and unprecedented nature of the loan program allow phishers to capitalize on the confusion surrounding it. Last year, the SBA gave out a total of $28 billion, but it now has to create a system to provide roughly 12 times that amount over the course of a few months. To help with the process, Congress allowed the SBA to expand its list of lenders. While this may help speed things up, it also means banks with no prior experience with SBA loan programs will now be distributing funds. Speeding up the loan process will certainly help ease the pain of many small businesses, but it also leaves room for errors, and scammers can exploit those errors for personal gain.

What to Look For

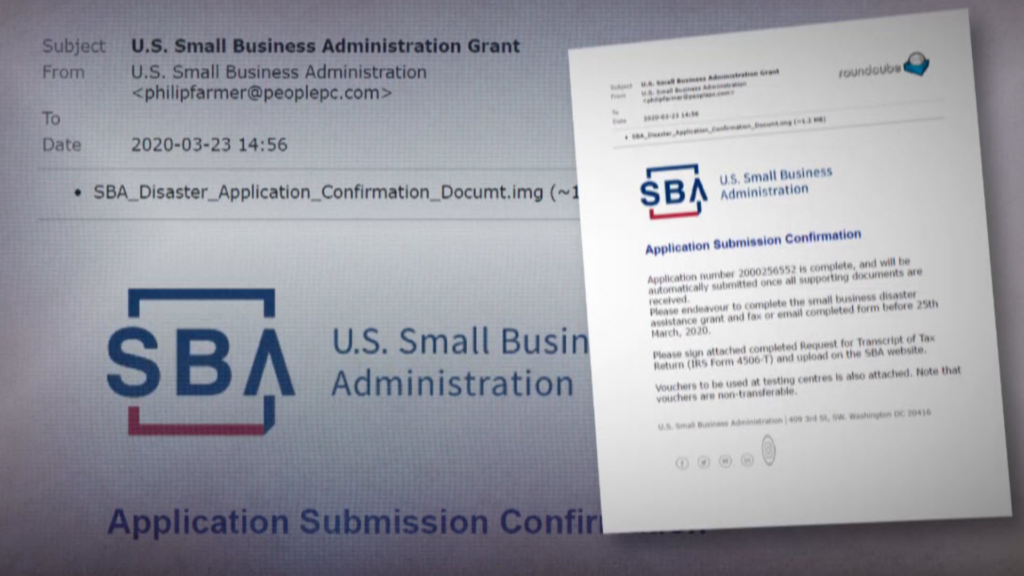

Business owners are already seeing this happen. A small business owner recently applied for a loan under the CARES Act to help keep her business running. Shortly after she filed her application, her husband received an email stating they would need to fill out and return a tax statement to complete their application.

The email included the SBA logo and looked legitimate. However, on closer inspection, she realized the account number listed in the email did not match the one she received when applying for the loan, and the email address was not from an SBA email account.

Breathe in, Breathe O-U-T

This business owner was savvy enough to not fall for the scam, but others are likely to be tricked into handing over sensitive information or paying money to online scammers. In order to protect people against phishing campaigns, we recommend what we call the Breathe O-U-T Process:

- When you open an email, first take a Breath. That alone is a good start, because it interrupts the automatic thinking and clicking that lead people to take the bait.

- Next, Observe the sender. Do you know the sender? Does their email address match who they say they are? Have you communicated with this sender before?

- Then, check URLs and attachments. Hover over the links to see whether the URL looks legitimate. Be wary of zip files or strange attachments. If you aren’t sure whether a URL is legitimate, just go to Google and search for the page there instead.

- Finally, take the Time to review the message. Is it relevant? Does it seem too urgent? Does the information match what you already know? How’s the spelling? Be wary of any email that tries too hard to create a sense of urgency. In addition, phish are notorious for poor spelling and grammar. While we don’t all write as well as our fourth-grade teacher, be careful when you see a lot of “missteaks”.
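Parts of the Observe and URL steps can also be automated as a rough first pass. The following Python sketch uses only the standard library to flag a From address whose domain doesn’t belong to the organization the sender claims to be, and links whose visible text shows one domain while the underlying href points to another (the mismatch that hovering reveals). The function names, the expected-domain input, and the example addresses are hypothetical; a check like this supplements the Breathe O-U-T habit rather than replacing it.

```python
from email.utils import parseaddr
from html.parser import HTMLParser
from urllib.parse import urlparse


def sender_mismatch(from_header: str, expected_domain: str) -> bool:
    """True if the From address is not on the claimed domain or one of its subdomains."""
    expected = expected_domain.lower()
    _display_name, address = parseaddr(from_header)
    domain = address.rpartition("@")[2].lower()
    return not (domain == expected or domain.endswith("." + expected))


def host_of(url: str) -> str:
    """Lower-cased host name of a URL-like string, or "" if none can be parsed."""
    if "://" not in url:
        url = "http://" + url
    try:
        return (urlparse(url).hostname or "").lower()
    except ValueError:  # e.g. unbalanced brackets in the text
        return ""


class LinkCollector(HTMLParser):
    """Collects (href, visible text) pairs for every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def suspicious_links(html: str) -> list[tuple[str, str]]:
    """Links whose visible text names one domain but whose href goes to another."""
    collector = LinkCollector()
    collector.feed(html)
    flagged = []
    for href, text in collector.links:
        if not text or " " in text:
            continue  # text like "click here" doesn't claim a destination
        shown_host = host_of(text)
        if "." in shown_host and shown_host != host_of(href):
            flagged.append((text, href))
    return flagged


print(sender_mismatch("SBA Loans <support@sba-loans-help.example>", "sba.gov"))  # True
email_html = (
    "<p>Finish your application at "
    '<a href="http://sba-relief.example/form">www.sba.gov/apply</a></p>'
)
print(suspicious_links(email_html))  # [('www.sba.gov/apply', 'http://sba-relief.example/form')]
```

A domain check like this misses look-alike domains and messages sent from compromised legitimate accounts, which is why taking the Time to review the content still matters.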

We’re living through strange and confusing times, and there are people out there who will use that to their advantage. Just taking a few extra minutes to make sure an email is legitimate could help save you a lot of extra time, worry, and money — none of which we can spare these days.

If you want to learn more about phishing scams and how to protect yourself, we are now offering the first month of our cyber awareness course entirely free. Just click here to sign up and get started.

by Doug Kreitzberg | Mar 27, 2020 | Coronavirus, Data Breach, malware, Phishing, ransomware, Uncategorized

Hackers are continuing to use the coronavirus crisis for personal profit. We recently wrote about the increase in malicious sites and phishing campaigns impersonating the World Health Organization and other healthcare companies. But now hackers appear to be turning their sights to the healthcare sector itself. Here are two notable cases from the past few weeks.

WHO Malware Attempt

Earlier this week, the World Health Organization confirmed that hackers had attempted to steal credentials from its employees. On March 13th, a group of hackers launched a malicious site imitating the WHO’s internal email system. Luckily, the attempted attack was caught early and did not succeed in gaining access to the WHO’s systems. However, this is just one of many attempts to hack into the WHO. The organization’s chief information security officer, Flavio Aggio, told Reuters that hacking attempts and impersonations have doubled since the coronavirus outbreak.

Similar attempted hacks against other healthcare organizations are popping up every day. Costin Raiu, head of global research and analysis at Kaspersky, told Reuters that “any information about cures or tests or vaccines relating to coronavirus would be priceless and the priority of any intelligence organization of an affected country.”

Ransomware Attack Against HMR

Unlike the attack on the WHO, a recent ransomware attack succeeded in stealing information from a UK-based medical company, Hammersmith Medicines Research (HMR). The company, which performs clinical trials of tests and vaccines, discovered an attack in progress on March 14th. Although HMR successfully restored its systems, a ransomware group called Maze claimed responsibility. On March 21st, Maze dumped the medical information of thousands of former patients and threatened to release more documents unless HMR paid a ransom. HMR has not disclosed how the attack occurred, but has stated that it will not pay the ransom.

Four days after the initial attack, Maze released a statement saying it would not target medical organizations during the coronavirus pandemic. Yet this did not stop the group from publicizing the stolen medical information a week after the attack. After the attack gained publicity, Maze changed its tune. The group removed all of the stolen files from its website, but blamed the healthcare industry for its lack of security procedures: “We want to show that the system is unreliable. The cyber security is weak. The people who should care about the security of information are unreliable. We want to show that nobody cares about the users,” Maze said.

Conclusion

Times of crisis and confusion are a hacker’s delight, and the staggering increase in attacks against the healthcare industry only helps prove that. The key to mitigating these threats is to set security configurations to industry best practices, continuously scan your networks, lock down or close open ports, secure or (preferably) remove Remote Desktop Protocol, and require multi-factor authentication for any remote access. And, certainly, make sure you are testing your incident response plan.

Subscribe to our blog here: https://mailchi.mp/90772cbff4db/dpblog