by Doug Kreitzberg | Dec 23, 2020 | Business Continuity, Facebook, Privacy, small business

Ever since Apple announced the new privacy features included in the release of iOS 14, Facebook has waged a war against the company, arguing that these new features will adversely affect small businesses and their ability to advertise online. What makes these attacks so “laughable” is not just Facebook’s disingenuous posturing as the protector of small businesses, but that their campaign against Apple suggests privacy and business are fundamentally opposed to each other. This is just plain wrong. We’ve said it before and we’ll say it again: Privacy is good for business.

In June, Apple announced that their new mobile operating system, iOS 14, would include a feature called “AppTrackingTransparency” that requires apps to ask users for permission before tracking their activity across other apps and websites. This feature is a big step towards prioritizing user control of data and the right to privacy. However, in the months following Apple’s announcement, Facebook has waged a campaign against Apple and its new privacy feature. In a blog post earlier this month, Facebook claimed that “Apple’s policy will make it much harder for small businesses to reach their target audience, which will limit their growth and their ability to compete with big companies.”

And Facebook didn’t stop there. They even took out full-page ads in the New York Times, Wall Street Journal and Washington Post to make their point.

Given that Facebook is currently being sued by more than 40 states for antitrust violations, there is some pretty heavy irony in the company’s stance as the protector of small business. Yet this only scratches the surface of what Facebook gets wrong in their attacks against Apple’s privacy features.

While targeted online advertising has been heralded as a more effective way for businesses to reach new audiences and start turning a profit, the groups that benefit the most from these highly targeted ad practices are in reality gigantic data brokers. In response to Facebook’s attacks, Apple released a letter, saying that “the current data arms race primarily benefits big businesses with big data sets.”

The privacy advocacy non-profit Electronic Frontier Foundation reinforced Apple’s point and called Facebook’s claims “laughable.” Startups and small businesses used to be able to support themselves by running ads on their website or app. Now, however, nearly the entire online advertising ecosystem is controlled by companies like Facebook and Google, who not only distribute ads across platforms and services, but also collect, analyze and sell the data gained through these ads. Because these companies have a stranglehold on the market, they also rake in the majority of the profits. A study by The Association of National Advertisers found that publishers only get back between 30 and 40 cents of every dollar spent on ads. The rest, the EFF says, “goes to third-party data brokers [like Facebook and Google] who keep the lights on by exploiting your information, and not to small businesses trying to work within a broken system to reach their customers.”

Because tech giants such as Facebook have overwhelming control over online advertising practices, small businesses that want to run ads have no choice but to use highly invasive targeting methods that end up benefitting Facebook more than the small businesses themselves. Facebook’s claim that their crusade against Apple’s new privacy features is meant to help small businesses simply doesn’t hold water. Instead, Facebook has a vested interest in maintaining the idea that privacy and business are fundamentally opposed to one another because that position suits their business model.

At the end of the day, the problem facing small businesses is not about privacy. The problem is the fundamental imbalance between a handful of gigantic tech companies and everyone else. The move by Apple to ensure all apps are playing by the same rules and protecting the privacy of their users is a good step towards leveling the playing field and thereby actually helping small businesses grow.

This also shows the potential benefits of a federal, baseline privacy regulation. Currently, U.S. privacy regulations are enacted and enforced at the state level, which, while a step in the right direction, can end up hampering business growth as organizations attempt to navigate various regulations with different levels of requirements. In fact, last year CEOs sent a letter to Congress urging the government to put in place federal privacy regulations, saying that “as the regulatory landscape becomes increasingly fragmented and more complex, U.S. innovation and global competitiveness in the digital economy are threatened” and that “innovation thrives under clearly defined and consistently applied rules.”

Lastly, we recently wrote about how consumers are willing to pay more for services that don’t collect excessive amounts of data on their users. This suggests that surveillance advertising and predatory tracking do not build customers; they build transactions. Apple’s new privacy features open up a space for businesses to use privacy-by-design principles in their advertising and services, providing a channel for those customers that place a value on their privacy.

Privacy is not bad for business; it’s only bad for business models like Facebook’s. By leveling the playing field and providing a space for new, privacy-minded business models to proliferate, we may start to see more organizations realize that privacy and business are actually quite compatible.

by Doug Kreitzberg | Dec 16, 2020 | Behavior, Cybersecurity, Privacy

In recent years, much has been made of the privacy paradox: the idea that, while people say they value their privacy, their online behaviors show they are more willing to give away personal information than they’d like to think. Tech giants like Facebook and Google have faced a number of highly public privacy scandals, yet millions upon millions of users continue to use these services every day. However, what happens when we think of the value of privacy not in terms of how much we want to protect our privacy, but instead in terms of how much we are willing to spend to keep our data private? Newly published research does just that and found that, when looking at the dollar value people place on privacy, there might not be as much of a paradox as we suspected, and businesses can even learn to leverage the market value of privacy to better understand what they should (and shouldn’t) collect from consumers.

The new study, conducted by Huan Tang, an assistant professor at the London School of Economics, analyzed how much personal information users in China were willing to disclose in exchange for consumer loans. Official credit scores do not exist in China, so consumers typically have to hand over a significant amount of personal information in order for banks to assess their credit. By looking at the decisions of 320,000 users on a popular Chinese lending platform, Tang was able to compare users’ willingness to disclose certain pieces of sensitive information against the cost of borrowing.

The results? Tang found that users were willing to disclose sensitive information in exchange for an average $33 reduction in loan fees. While for many in the U.S. $33 may not seem all that significant, it actually represents 70% of the daily salary in China, showing that users place a significantly high value on their privacy. What’s more, on the bank’s side this translates to a 10% decrease in revenue when they require users to disclose additional personal information.

There are a number of important implications of this study for businesses. For one, it suggests, as Tang says, “that maybe there is no ‘privacy paradox’ after all,” meaning consumers’ online behaviors do, in fact, seem to show that they value their right to privacy. While today businesses often utilize the data they collect to make money, by collecting everything and anything they can get their hands on, businesses may be losing significant revenue in lost business. According to Tang, collecting more information than necessary turns out to be inefficient. Instead, businesses can leverage the monetary value users place on their data to be more discerning when deciding what information to collect. If a piece of data is highly valued by consumers and has little direct economic benefit for a company, it may not be worth collecting. Of course, limiting data is a key tenet of Privacy by Design principles, which organizations should be applying to their practices in order to improve their privacy posture vis-a-vis GDPR and other privacy regulations. Limiting data also improves the organization’s cybersecurity posture because it reduces its exposure.

While it may seem counterintuitive given today’s standard practice of collecting as much data as possible, this study shows that limiting the data that is collected can be, according to Tang, a “win-win” for businesses and consumers alike.

by Doug Kreitzberg | Oct 23, 2020 | Google, Privacy

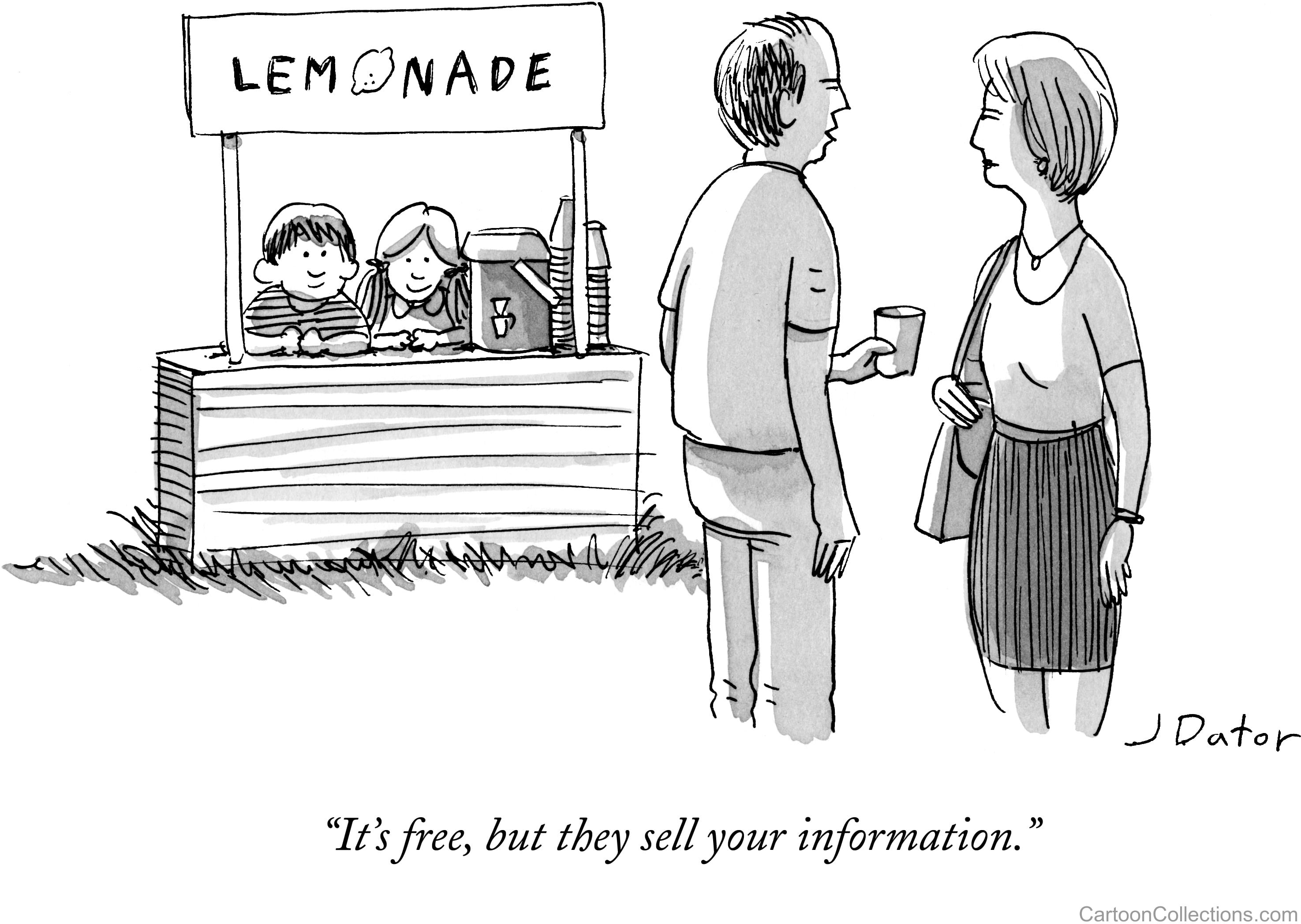

The recently announced antitrust suit against Google is not about privacy, per se. It is about leveraging monopolistic power to secure a dominant position on mobile devices. One of Google’s claims is that it provides a free service to consumers so there is, in the end, no harm caused by their actions.

In fact, Google is not offering their services for free; they provide us their capabilities in return for our information and our behavioral tendencies. That data is pumped into algorithms that predict our behaviors and tendencies, and is then sold to third parties.

What will be interesting is how much of this will be exposed during the case. Google’s use of data has historically been opaque. It will also be interesting to see whether this case opens more eyes to the importance and value of privacy. Are we perfectly happy giving away our privacy in return for free search, or do we have no other choice because Google has so much dominance that it permeates our digital worlds whether we want it or not?

In the end, of course, there is no free lunch (or lemonade). The only question is what price we are willing to pay.

by Doug Kreitzberg | Apr 29, 2020 | Coronavirus, Privacy

As the COVID-19 pandemic continues, the world has turned to the tech industry to help mitigate the spread of the virus and, eventually, help transition out of lockdown. Earlier this month, Apple and Google announced that they are working together to build contact-tracing technology that will automatically notify users if they have been in proximity to someone who has tested positive for COVID-19. However, reports show that there is a severe lack of evidence that these technologies can accurately report infection data. Additionally, it is unclear whether these types of apps can effectively assist the marginalized populations where the disease seems to have the largest impact. Combined with the invasion of privacy that this involves, the U.S. needs to more seriously interrogate whether or not the potential rewards of app-based contact tracing outweigh the obvious, and potentially long-term, risks involved.

First among the concerns is the potential for the information collected to be used to identify and target individuals. For example, in South Korea, some have used the information collected through digital contact tracing to dox and harass infected individuals online. Some experts fear that the collected data could also be used as a surveillance system to restrict people’s movement through monitored quarantine, “effectively subjecting them to home confinement without trial, appeal or any semblance of due process.” Such tactics have already been used in Israel.

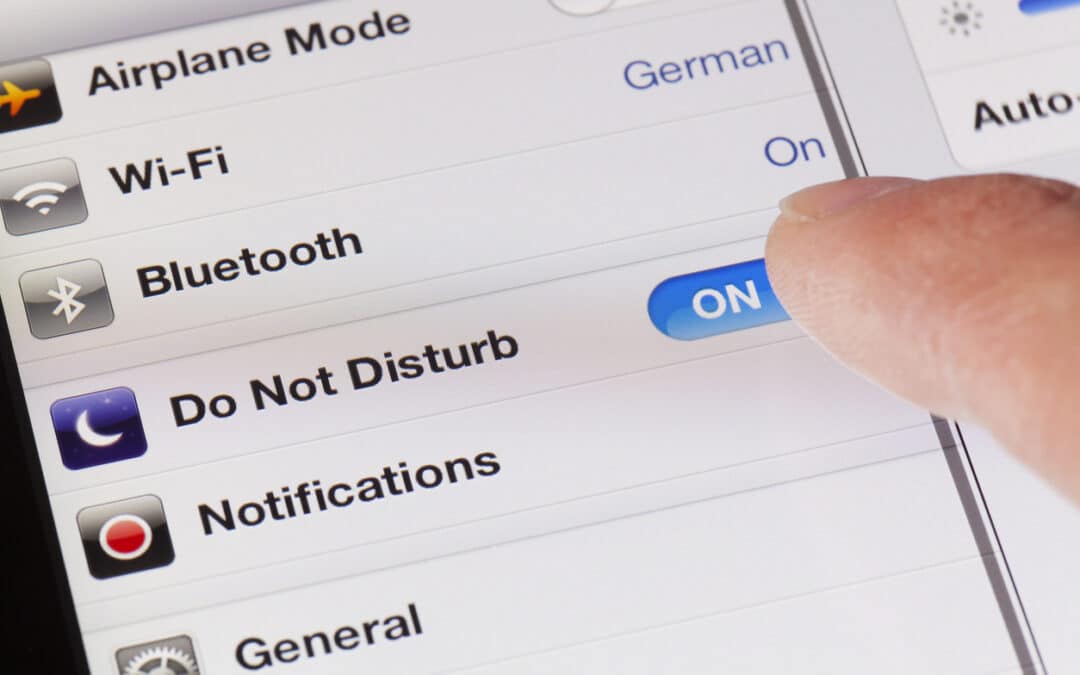

Apple and Google have taken some steps to mitigate the concerns over privacy, claiming they are developing their contact tracing tools with user privacy in mind. According to Apple, the tool will be opt-in, meaning contact tracing is turned off by default on all phones. They have also enhanced their encryption technology to ensure that any information collected by the tool cannot be used to identify users, and promise to dismantle the entire system once the crisis is over.

Risk

Apple and Google are not using the phrase “contact tracing” for their tool, instead branding it as “exposure notification.” However, changing the name to sound less invasive doesn’t do anything to ensure privacy. And despite the steps Apple and Google are taking to make their tool more private, there are still serious short- and long-term privacy risks involved.

In a letter sent to Apple and Google, Senator Josh Hawley warns that the impact this technology could have on privacy “raises serious concern.” Despite the steps the companies have taken to anonymize the data, Senator Hawley points out that by comparing de-identified data with other data sets, individuals can be re-identified with ease. This could potentially create an “extraordinarily precise mechanism for surveillance.”
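The re-identification concern can be illustrated with a minimal sketch of a linkage attack. Everything below is hypothetical: the records, names, and the choice of ZIP code, birth year and gender as linking fields are assumptions made for illustration, and they are not the data that the Apple and Google tool collects. The point is only that a data set with names removed can be joined to a second data set that shares a few attributes.

```python
# Hypothetical "de-identified" health records: names removed, other attributes kept.
deidentified = [
    {"zip": "02139", "birth_year": 1985, "gender": "F", "status": "positive"},
    {"zip": "02139", "birth_year": 1990, "gender": "M", "status": "negative"},
    {"zip": "10001", "birth_year": 1985, "gender": "F", "status": "negative"},
]

# Hypothetical second data set (for example, a public registry) that includes names.
public_records = [
    {"name": "Alice Example", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_year": 1990, "gender": "M"},
    {"name": "Carol Example", "zip": "94105", "birth_year": 1972, "gender": "F"},
]

# Attributes shared by both data sets (assumed for this illustration).
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def reidentify(deidentified_rows, named_rows, keys=QUASI_IDENTIFIERS):
    """Return (name, status) pairs where the shared attributes match exactly one person."""
    index = {}
    for person in named_rows:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])

    matches = []
    for row in deidentified_rows:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match links the record to a named person
            matches.append((names[0], row["status"]))
    return matches


linked = reidentify(deidentified, public_records)
```

In this toy example two of the three "anonymous" records link back to a named person, because the combination of shared attributes is unique to them.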

Senator Hawley also questions Apple and Google’s commitment to delete the program after the crisis comes to an end. Many privacy experts have echoed these concerns, worrying what impact these expanded surveillance systems will have in the long term. There is plenty of precedent to suggest that relaxing privacy expectations now will change individual rights far into the future. The “temporary” surveillance program enacted after 9/11, for example, is still in effect today and was even renewed last month by the Senate.

Reward?

Contact tracing is often heralded as a successful method to limit the spread of a virus. However, a review published by a UK-based research institute shows that there is simply not enough evidence to be confident in the effectiveness of using technology to conduct contact tracing. The report highlights the technical limitations involved in accurately detecting contact and distance. Because of these limitations, this technology might lead to a high number of false positives and negatives. What’s more, app-based contact tracing is inherently vulnerable to fraud and cyberattack. The report specifically worries about the potential for “people using multiple devices, false reports of infection, [and] denial of service attacks by adversarial actors.”

Technical limitations aside, the effectiveness of digital contact tracing also requires both a large compliance rate and a high level of public trust and confidence in this technology. Nothing suggests Apple and Google can guarantee either of these requirements. The lack of evidence showing the effectiveness of digital contact tracing puts into question the use of such technology at the cost of serious privacy risks to individuals.

If we want to appropriately engage technology, we should determine the scope of the problem with an eye towards assisting the most vulnerable populations first and at the same time ensure that the perceived outcomes can be obtained in a privacy-preserving manner. Governments need to lay out strict plans for oversight and regulation, coupled with independent review. Before compromising individual rights and privacy, the U.S. needs to thoroughly assess the effectiveness of this technology while implementing strict and enforceable safeguards to limit the scope and length of the program. Absent that, any further intrusion into our lives, especially if the technology is not effective, will be irreversible. In this case, the cure may well be worse than the disease.

by Doug Kreitzberg | Apr 10, 2020 | Privacy

There’s been a lot of talk about privacy lately, whether it’s about how social media is tracking and selling your every move online, or video-conferencing privacy breaches, or regulations such as GDPR or CCPA. And now, with COVID-19, there are numerous conversations about the balance between effective mitigation through contact tracing and privacy rights (e.g., is it OK for the government to know my health status and track me if I’m positive?).

For Companies — Privacy Builds Trust and Trust Builds Value.

Conversations about privacy are healthy and important. And as a business, those conversations should be starting early in your strategic planning. If you do it right, you can build brand value. If you do it wrong, or only do it when pressed by your clients or the press, you have an uphill battle. Just ask Zoom.

Privacy by Design creates the framework for building a brand based on respect

The best thing, therefore, is to get ahead of the curve and build a concept called Privacy by Design into your systems and operations planning. Privacy by Design is a set of foundational principles originally developed by the former Privacy Commissioner of Ontario, Ann Cavoukian, and has subsequently been incorporated into the E.U.’s privacy regulation, GDPR.

Privacy as the Default is key

A full review of the Privacy by Design principles is beyond the scope of this blog; they can be reviewed here. One of the principles I would like to review is the concept of Privacy as the Default. As the name implies, this principle states that all aspects of the system and operational workflows assume privacy first. For every piece of personal or sensitive information, we first ask why we need it. Is it actually crucial to the client’s use of our product or our ability to serve the client?

If we decide we need the data, we should then seek to limit how much and for how long we need to keep the data. And we should be transparent with our clients as to why and how their data will be used and disposed of and to whom and under what conditions it may be shared.
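As a rough sketch of what Privacy as the Default can look like in practice, the snippet below keeps only fields that have a documented purpose and flags records that have outlived a retention period. The field names, purposes, and the one-year retention window are hypothetical choices made for illustration, not a recommendation or a legal standard.

```python
from datetime import date, timedelta

# Hypothetical allow-list: every field we keep must have a stated purpose.
ALLOWED_FIELDS = {
    "email": "account login and receipts",
    "plan": "billing",
}

# Hypothetical retention window; the right value depends on the business and the law.
RETENTION = timedelta(days=365)


def minimize(record):
    """Drop any field that has no documented purpose."""
    return {field: value for field, value in record.items() if field in ALLOWED_FIELDS}


def is_expired(collected_on, today):
    """True if the record has been held longer than the retention window."""
    return today - collected_on > RETENTION


signup = {"email": "client@example.com", "plan": "pro", "birthdate": "1985-01-01"}
stored = minimize(signup)  # the birthdate is never stored
```

Keeping the purposes next to the field names also gives you the raw material for telling clients why each piece of data is collected.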

Differentiation in a digital age is harder than ever. Fortunately, you can demonstrate that you respect your clients and improve your brand value by being proactive with regard to privacy.

by Doug Kreitzberg | Apr 8, 2020 | Coronavirus, Privacy

Much has been written about the security and privacy issues with the Zoom videoconferencing application. What may get more attention over the next few months (and in numerous case studies) is how Zoom is responding to those issues.

To begin, the CEO, Eric Yuan, has apologized for Zoom’s prior lack of focus on privacy. Next, his team has stopped all development projects to focus exclusively on security and privacy issues. In addition, he has hired Alex Stamos to be Zoom’s privacy and security advisor and has recruited top Chief Security Officers from around the world to serve on an advisory board.

With a user base that has more than doubled since the beginning of the year, Zoom has benefited greatly from the global work-from-home environment. It is incredible that it has been able to sustain its operability during this growth. But it’s perhaps more impressive that the company, and its CEO in particular, is focusing seriously and aggressively on privacy. This is particularly notable in an era that is unfortunately also fraught with profiteering, scamming and passing the buck.

Hopefully, it is a wake-up call for any company to take its privacy issues seriously and to recognize that by doing so, you are not only securing public trust, you are creating brand value.

In 1982, Tylenol responded to its own crisis, when some of its products were tampered with, leading to poisonings, by pulling every bottle off the shelves and owning the issue. Since then, their response has been a PR crisis case study.

I think Zoom is on its way to becoming a case study as well.