by Doug Kreitzberg | Dec 10, 2021 | Cyber Awareness, Cybersecurity, Data Breach, Human Factor

This fall, the personal health information of over 170,000 dental patients was exposed in a data breach associated with the Professional Dental Alliance, a network of dental practices affiliated with the North American Dental Group. According to the Professional Dental Alliance, patient information was exposed due to a successful phishing attack against one of their vendors, North American Dental Management (NADM). The phishing campaign gave attackers access to some of NADM’s emails, where the personal information of patients was apparently stored.

While the Professional Dental Alliance has said their electronic dental record system and dental images were not accessed, an investigation found that protected health information of patients, such as names, addresses, email addresses, phone numbers, insurance information, Social Security numbers, dental information, and/or financial information, was accessed by the attackers.

This is not the first time dental offices have found themselves the target of a data breach. In 2019, a ransomware attack against a managed service provider resulted in the exposure of patient information from over 100 Colorado dental offices. A year later, the information of over 1 million patients was exposed after an attack against the Dental Care Alliance.

These incidents reveal just how vulnerable professionals can be to cyberattacks and data breaches. One reason is that many professionals run small businesses that don’t have the time or expertise to deal with everything that goes into cybersecurity. So, many professionals rely on vendors and associations to ensure they are protected. The issue is, if those vendors and associations experience a breach, professionals are also at risk.

To keep their patient information safe, it’s vital that dental offices and all professional businesses pay attention to some of the human risks that can lead to cybersecurity incidents. The attack this week, for instance, was the result of a phishing attack that tricked an employee into handing over account credentials. Here are a few things all professionals can easily do on their own to stay secure:

Endpoint Detection and Response

Endpoint detection and response (EDR) is a type of security software that actively monitors endpoints like phones, laptops, and other devices to identify any activity that could be malicious or threatening. Once a potential threat is identified, EDR will automatically respond by getting rid of or containing the threat and notifying your security or IT team. EDR is vital today to stay on top of potential threats and put a stop to them before they can cause any damage.

Multi-Factor Authentication

Using multi-factor authentication (MFA) is a simple yet powerful tool for stopping the bad guys from using stolen credentials. For example, if an employee is successfully phished and the attacker gets that employee’s login information, having MFA in place for that employee’s account can stop the attacker from accessing the account even if they have the right username and password. If possible, all users accessing your system should have multi-factor authentication set up for all of their accounts. At minimum, however, it is extremely important that every user with administrative privileges use MFA, whether they are accessing your network remotely or on-premises.
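To see why a stolen password alone is not enough, here is a minimal sketch of one common second factor: the time-based one-time password (TOTP) described in RFC 6238, which is what most authenticator apps generate. The function names and the `login_allowed` helper are our own illustrative assumptions, not any vendor’s API, and a real deployment should rely on a vetted MFA service rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 time-based one-time password using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    # The code changes every `step` seconds because the counter does.
    counter = int(at_time) // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte slice.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def login_allowed(password_ok: bool, submitted_code: str, secret: bytes, at_time=None) -> bool:
    """Hypothetical login check: require BOTH the password and the current code."""
    expected = totp(secret, at_time)
    return password_ok and hmac.compare_digest(submitted_code, expected)
```

A phished password makes `password_ok` true, but without the shared secret stored on the employee’s device the attacker still has to guess the current code.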

Patching

Hackers are constantly looking for vulnerabilities in the software we rely on to run our businesses. All those software updates may be annoying to deal with, but they often contain important security fixes that “patch up” known vulnerabilities. At the end of the day, if you’re using out-of-date software, you’re at an increased risk for attack. It’s therefore important that your team installs software updates as soon as they become available.

Backups

Having a backup of your systems could allow you to quickly restore your systems and data in the event of an attack. This is especially important if you are hit by ransomware, in which the attackers encrypt your data and demand payment to unlock it. However, it’s essential to have an effective backup strategy to ensure the attackers can’t reach your backups along with everything else. At minimum, at least one backup should be stored offsite. You should also use different credentials for each copy of your backup. Finally, you should regularly test your backups to ensure you will be able to quickly and effectively get your systems back online if an attack happens.
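The three rules above (one copy offsite, different credentials per copy, regular restore tests) are easy to turn into a checklist you can run against your own backup inventory. The sketch below is only an illustration: the `BackupCopy` fields, the `backup_gaps` function, and the 90-day test interval are assumptions we picked for the example, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class BackupCopy:
    name: str
    offsite: bool
    credential_id: str       # label for the account that can access this copy
    last_restore_test: date  # last time a restore from this copy was tested


def backup_gaps(copies, today, max_test_age_days=90):
    """Return a list of plain-language problems with a backup plan."""
    gaps = []
    if not any(c.offsite for c in copies):
        gaps.append("no copy is stored offsite")
    credential_ids = [c.credential_id for c in copies]
    if len(set(credential_ids)) < len(credential_ids):
        gaps.append("two or more copies share the same credentials")
    cutoff = today - timedelta(days=max_test_age_days)
    for c in copies:
        if c.last_restore_test < cutoff:
            gaps.append(f"restore test for '{c.name}' is older than {max_test_age_days} days")
    return gaps


# Hypothetical inventory: both copies use the same account, one test is stale.
copies = [
    BackupCopy("office-nas", False, "backup-admin", date(2021, 11, 1)),
    BackupCopy("cloud", True, "backup-admin", date(2021, 6, 1)),
]
for gap in backup_gaps(copies, today=date(2021, 12, 10)):
    print(gap)
```

An empty list means the plan meets the minimums described above; it does not prove the backups are safe from every attack.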

Security Awareness Training

As this latest data breach shows, phishing and social engineering attacks are common ways attackers gain access to your systems. Unfortunately, phishing attacks are not something you can fix with a piece of software. Instead, it’s essential that employees are provided with the training they need to spot and report any phish they come across. Sometimes it only takes one wrong click for the bad guys to worm their way in.

by Doug Kreitzberg | Dec 3, 2021 | Behavior, Cyber Awareness, Human Factor

A recent article in The Wall Street Journal highlights some of the big changes that businesses have made to their employee training programs since the start of the pandemic. Typically, these trainings were formal, multi-hour in-person meetings. According to Katy Tynan, research analyst at Forrester Research, “formal, classroom-delivered training was easy to plan and deliver, but organizations didn’t always see the intended results.” Once the pandemic came along, trainings moved online, and businesses began offering fun, informal, bite-size lessons that employees take over time. These changes to classical training programs echo many of the behavior-design principles that we incorporate into our cybersecurity awareness training.

Let’s break down some of the key changes the Journal article discusses and how they relate to behavior-design principles:

1. Keep it Simple

Instead of hours-long trainings, businesses are starting to break down their trainings into small pieces for employees. In behavior-design terms, this represents an important element of creating change: making sure users can easily do what we are asking them to do. Simply put, you can’t throw a ton of information at someone and expect them to keep up with it all. What’s more, employees will be a lot more willing to go through with a training if they know it will only take 5 minutes instead of 5 hours. Keeping trainings short and easy to do is therefore an important step towards ensuring that your desired outcome aligns with your employees’ abilities.

2. Consistency is Key

Most traditional training programs are a one-and-done deal. Once it’s over, you never have to worry about it again. However, this is exactly what we don’t want employees to take away from training. Instead, consistency is key for any changes. With short lessons, employees can go through the program in small, daily steps that are easy to manage while also keeping the training in their mind over an extended period of time.

3. Make it Interesting

The final piece of the behavioral puzzle is ensuring that employees actually want to do the trainings. Most traditional training programs may involve some small group discussions, but overall employees are shown videos and made to listen to someone talk at them for long periods of time. Employees are only taking in information passively. Instead, trainings should be fun, interesting, and engaging to keep users coming back for more.

The pandemic has brought about so many changes to our lives. While some of the changes have been for the worse, it’s also forced us to start thinking differently about how we do things and come up with creative solutions. The new trend in training programs is one such change. And what makes these changes so successful is the way they incorporate some of the basic behavior-design principles. This is the approach we took when we developed The PhishMarket™, our cyber awareness training program. By offering engaging and interactive 2-4 minute lessons given daily over an extended period of time, our program has shown success in reducing employee phish susceptibility 50% more than the industry standard.

by Doug Kreitzberg | Oct 26, 2021 | Cyber Awareness, Human Factor

In many cases, our employees are our first line of defense against cyber-attack. However, for employees to start developing habits that are in line with cybersecurity practices, it’s essential that business leaders understand effective strategies for getting these habits to stick. One of the main tenets of behavioral science is that the new habit you want to see needs to be easy to accomplish.

Ideally, you and your IT team can put in place effective cybersecurity controls that make developing secure habits easier for your employees. But what happens when these security features make it more difficult for users to perform the positive and secure behaviors you want to see?

This is the topic of new research on cybersecurity risk management and behavior design. In “Refining the Blunt Instruments of Cybersecurity: A Framework to Coordinate Prevention and Preservation of Behaviors,” researchers Simon Parkin and Yi Ting Chua highlight the importance of making sure that cybersecurity controls that limit malicious or negative behaviors don’t also restrict the positive behaviors your employees are trying to accomplish. For example, it’s common practice for companies to require their employees to change their passwords every few months. However, not only does this put the burden on employees for keeping their accounts secure, research has shown that users who are required to create new passwords frequently tend to use less and less secure passwords over time. While you may think having employees change their passwords will help keep your network more secure, doing so might actually have the opposite effect.

To ensure security controls aren’t restricting users from engaging in positive behaviors, Parkin and Chua emphasize the need to more precisely target malicious behaviors. To do so, they outline three steps business leaders and IT teams should take to more precisely define their cybersecurity controls.

1. Create a system to identify positive behaviors

To ensure you are preserving the positive behaviors your employees are doing, you first have to figure out how to track those behaviors. Unfortunately, it can be a lot easier to identify behaviors you don’t want to see than those you do want to see. An employee clicking a malicious link in an email, for example, can be identified. But how do you identify when an employee doesn’t click the link in a phishing email? One solution is to give users access to a phish reporting button directly within their email client.

Whatever you decide, it’s essential to both identify the positive behaviors you want to see and create a system to track when those behaviors are used by employees.
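Once a report button exists, tracking can be as simple as counting outcomes. The sketch below assumes you can export a list of (employee, action) pairs from a phishing simulation or your email client; the action labels `reported`, `clicked`, and `ignored` and the function name are hypothetical and chosen only for illustration.

```python
from collections import Counter

ACTIONS = ("reported", "clicked", "ignored")


def behavior_rates(events):
    """Return the share of each action across (employee, action) events."""
    counts = Counter(action for _, action in events)
    total = sum(counts[a] for a in ACTIONS)
    if total == 0:
        return {a: 0.0 for a in ACTIONS}
    return {a: counts[a] / total for a in ACTIONS}


# Hypothetical results from one simulated phishing email.
events = [
    ("alex", "reported"),
    ("blair", "clicked"),
    ("casey", "reported"),
    ("drew", "ignored"),
]
print(behavior_rates(events))
```

Watching the `reported` share over time gives you a positive behavior to measure, alongside the `clicked` share you were probably already tracking.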

2. Find linkages between negative and positive behaviors

Now that you can track both positive and negative behaviors, the next step is to look at your security controls and identify possible linkages between the negative behavior the control is designed to restrict and the positive behaviors you want employees to engage in. If a control affects both positive and negative behaviors, the control is creating a linkage, and that is a linkage you want to break.

3. Better define controls to prevent negative behaviors and promote positive behaviors

Once you’ve identified linkages between positive and negative behaviors, the next step is to find ways to ensure your controls are only affecting the negative behaviors. For example, instead of requiring users to create new passwords every few months, system monitoring tools can be used to detect suspicious activity and block access to a user’s account without the user having to do anything.
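As a rough illustration of a control that targets only the negative behavior, the sketch below locks an account after a burst of failed logins instead of asking every user to change passwords on a schedule. The `LoginMonitor` class and its thresholds (5 failures in 300 seconds) are assumptions made for this example; real monitoring tools look at many more signals.

```python
from collections import defaultdict, deque


class LoginMonitor:
    """Locks an account after too many failed logins in a short window."""

    def __init__(self, max_failures=5, window_seconds=300):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of recent failures
        self.locked = set()

    def record_failure(self, user, timestamp):
        recent = self._failures[user]
        recent.append(timestamp)
        # Drop failures that fall outside the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_failures:
            self.locked.add(user)

    def is_allowed(self, user):
        return user not in self.locked


monitor = LoginMonitor(max_failures=3, window_seconds=60)
for seconds in (0, 10, 20):
    monitor.record_failure("pat", seconds)
print(monitor.is_allowed("pat"))
```

Employees who log in normally never notice this control, which is the point: it restricts the suspicious pattern without adding a chore to anyone’s day.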

At the end of the day, if the habits you want your employees to form aren’t easy to accomplish, it’s not going to happen. And it’s definitely not going to happen if your security controls are actively making things harder for your employees. It’s essential for you and your IT team to take the time to review your current controls and actively identify ways to maintain your security without affecting your employees’ ability to form secure habits at work.

by Doug Kreitzberg | Mar 26, 2021 | Coronavirus, Cyber Awareness, Misinformation

We’ve written before about how the disruption and confusion of the COVID-19 pandemic has caused an uptick in phishing and disinformation campaigns. Yet, there is another dimension to this that is just beginning to become clear: how the isolation of remote work helps to create the conditions necessary for disinformation to take root.

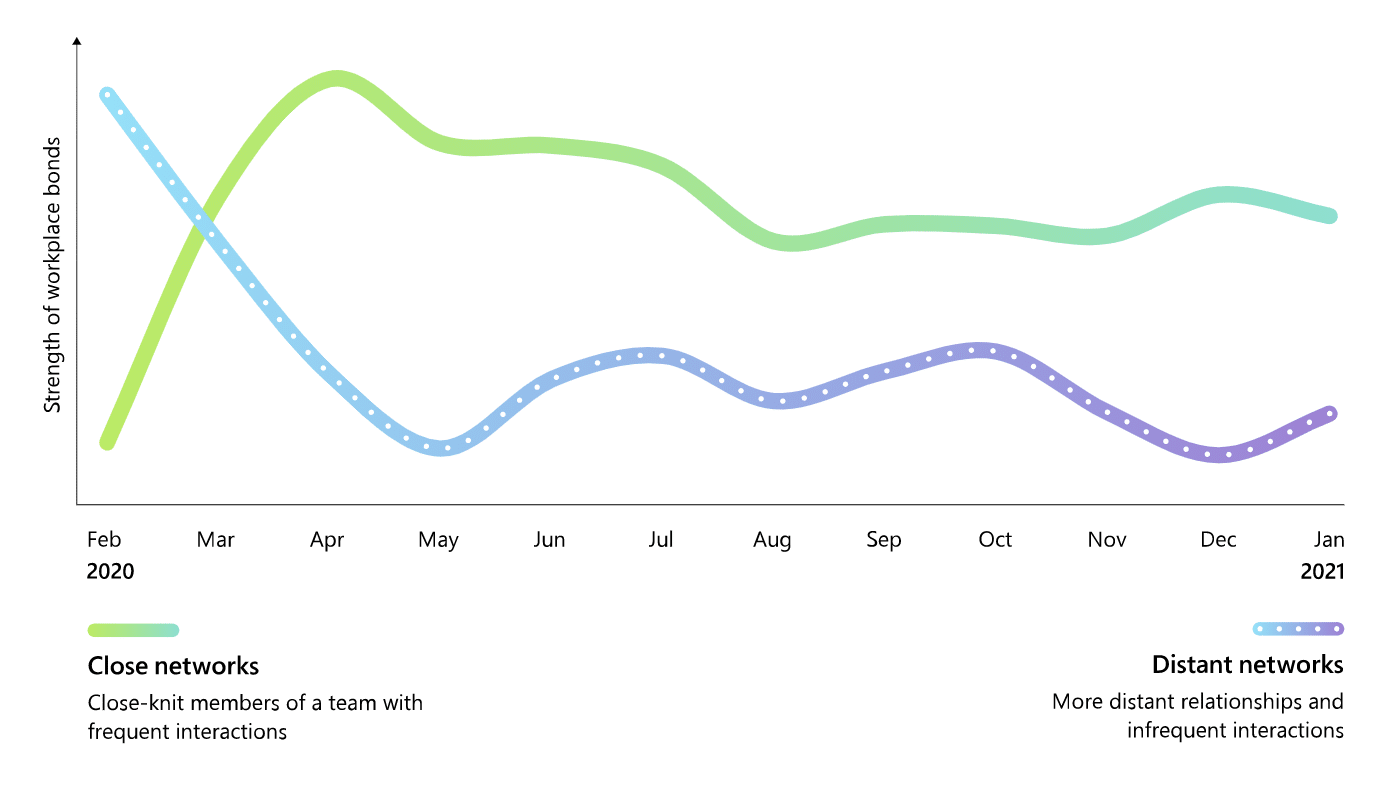

In a report on the impacts of remote and hybrid work on employees, Microsoft highlights how remote work has shrunk our networks. Despite the ability to use video services like Zoom and Microsoft Teams to collaborate with others across the globe, the data reveals that remote work has actually caused us to consolidate our interactions to just those we work closely with, and far less with our extended networks. The result is that employees and teams have become siloed, creating a sort of echo chamber in which new and diverse perspectives are lost. According to Dr. Nancy Baym, Senior Principal Researcher at Microsoft, when our networks shrink, “it’s harder for new ideas to get in and groupthink becomes a serious possibility.”

The gap between interactions with our close network and our distant network created by remote work doesn’t just stifle innovation, it’s also what creates the conditions necessary for disinformation to thrive. When we are only exposed to information and perspectives that are familiar to us, it becomes harder and harder to question what we are being presented. If, for example, we are in a network of people who all believe Elvis is still alive, without exposure to other people who think Elvis in fact isn’t alive we would probably just assume there isn’t any reason to question what those around us are telling us.

The point is, without actively immersing ourselves within networks with differing perspectives, it becomes difficult to exercise our critical thinking abilities and make informed decisions about the validity of the information we are seeing. Remote and hybrid work is likely going to stick around long after the pandemic is over, but that doesn’t mean there aren’t steps we can take to ensure we don’t remain siloed within our shrunken networks. In order to combat disinformation within these shrunken networks we can:

1. Play the Contrarian

When being presented with new information, one of the most important ways to ensure we don’t blindly accept something that may not be true is to play the contrarian and take up the opposite point of view. You may ultimately find that the opposite perspective doesn’t make sense, but considering it will help you take a step back from what you are being shown and give you the chance to recognize there may be more to the story than what you are seeing.

2. Engage Others

It may seem obvious, but engaging with opinions and perspectives that are different than what we are accustomed to is essential to breaking free of the type of groupthink that disinformation thrives on. It can also be a lot harder than it sounds. The online media ecosystem isn’t designed to show you a wide range of perspectives. Instead, it’s up to us to take the time to research other points of view and actively seek out others who see things differently.

3. Do a Stress Test

Once you have a better sense of the diversity of perspectives on any given topic, you’re now in a position to use your own critical thinking skills to evaluate what you, and not those around you, think is true. Taking in all sides of an issue, you can then apply a stress test in which you try to disprove each point of view. Whichever perspective seems to hold up the best or is hardest to challenge will give you a good base to make an informed decision about what you think is most legitimate.

From our personal lives to the office, searching for opposite and conflicting perspectives will help build resilience against the effects of disinformation. It can even help us be more effective at spotting phish and social media campaigns. By looking past the tactics designed to trick us into clicking on a link or giving away information, and taking a few seconds to take a breath, examine what we are looking at, and stress test the information we are being shown, we can be a lot more confident in our ability to tell the difference between phish and phriend.

by Doug Kreitzberg | Sep 9, 2020 | Cyber Awareness, GDPR, ransomware

In July, we wrote about a ransomware attack suffered by the cloud computing provider Blackbaud that led to the potential exposure of personal information entrusted to Blackbaud by hundreds of non-profits, health care organizations, and educational institutions. At the time the ransomware attack was announced, security experts questioned Blackbaud’s response to the breach. Now, the Blackbaud ransomware attack isn’t just raising eyebrows: the company is facing a class action lawsuit over its handling of the attack.

Blackbaud was initially attacked on February 7th of this year. However, according to the company, they did not discover the issue until mid-May. While the time it took the company to detect the intrusion was long, it is increasingly common for threats to go undetected for long periods of time. What really gave security experts pause is how Blackbaud responded to the incident after detecting it.

The company was able to block the hacker’s access to their networks, but the hacker’s attempts to regain control continued until June 3rd. The problem, however, was that the hackers had already stolen data sets from Blackbaud and demanded a bitcoin payment before they would destroy the information. Blackbaud remained in communication with the attackers until at least June 18th, when the company paid the ransom. Of course, many experts questioned Blackbaud’s decision to pay given that there is no way to guarantee the attackers kept their word. And, to make matters worse, the company did not publicly announce the incident to the hundreds of non-profits that use their service until July 16th, nearly two months after initially discovering the incident.

Each aspect of Blackbaud’s response to the ransomware attack is now a part of a class action lawsuit filed against the company by a U.S. resident on August 12th. The main argument of the lawsuit claims that Blackbaud did not have sufficient safeguards in place to protect the private information that the company “managed, maintained, and secured,” and that Blackbaud should cover the costs of credit and identity theft monitoring for those affected. The lawsuit also alleges that Blackbaud failed to provide “timely and adequate notice” of the incident. Finally, regarding Blackbaud’s payment of the ransomware demand, the lawsuit argues that the company “cannot reasonably rely on the word of data thieves or ‘certificate of destruction’ issued by those same thieves, that the copied subset of any Private Information was destroyed.”

Despite the agreement among privacy experts that Blackbaud’s response to the attack was anything but perfect, lawsuits pertaining to data breaches have historically had a low success rate in the U.S. According to an attorney involved in the case, showing harm requires proving a financial loss rather than relying on the more abstract harm caused by a breach of privacy: “The fact that we don’t assign a dollar value to privacy [means] we don’t value privacy.”

Whatever the result of the lawsuit, questions still persist on whether Blackbaud’s response violates the E.U.’s General Data Protection Regulation. The GDPR requires organizations to submit notification of a breach within 72 hours of discovery. Because many of Blackbaud’s clients are UK-based and the company took months to notify those affected, it is possible Blackbaud could receive hefty fines for their response to the attack. A spokesperson for the UK’s Information Commissioner’s Office told the BBC that the office is making enquiries into the incident.
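To make the 72-hour window concrete, here is a small sketch that computes a notification deadline from the moment a breach is discovered. The function names are our own, and the timestamps in the example are hypothetical placeholders, not the actual Blackbaud dates; whether a given notification satisfies the GDPR is a legal question this arithmetic cannot answer.

```python
from datetime import datetime, timedelta, timezone

NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(discovered_at):
    """Latest time a breach notification is due, 72 hours after discovery."""
    return discovered_at + NOTIFICATION_WINDOW


def is_late(discovered_at, notified_at):
    """True if the notification went out after the 72-hour window closed."""
    return notified_at > notification_deadline(discovered_at)


# Hypothetical example: discovered on a Monday morning, notified on Friday.
discovered = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
notified = datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(discovered).isoformat())
print(is_late(discovered, notified))
```

A gap measured in months, as described above, falls far outside a window measured in hours.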

As for the non-profits, healthcare organizations, and educational institutions that were affected by the breach? They have had to scramble to submit notifications to their donors and stakeholders that their data may have been compromised. Non-profits in particular rely on their reputations to keep donations coming in. While these organizations were not directly responsible for the breach, this incident highlights the need to carefully review third-party vendors’ security policies and to create a written security agreement with all vendors before using their services.

by Doug Kreitzberg | Aug 31, 2020 | Cyber Awareness

When it comes to cybersecurity training, it’s easy to focus your energy on employees who may not have an advanced understanding of your network and technological systems. And, of course, it is vitally important these employees understand the basics of cybersecurity and adopt behaviors that help protect your systems from compromise. However, implicit in this way of thinking is the assumption that your IT staff, who are incredibly knowledgeable about your systems, don’t need to be trained in cybersecurity. This couldn’t be further from the truth.

While your IT team is likely more aware of existing cyber threats, they also likely have administrative access across your entire network, making their accounts far more costly if compromised. What’s more, IBM’s 2020 Cost of a Data Breach Report found that 19% of all malicious attacks are initially caused by cloud misconfigurations, which are generally the responsibility of IT staff.

It is therefore vitally important to ensure your IT team is receiving role-specific cybersecurity training alongside the rest of your staff. This training should still cover a lot of the basics that every employee needs to know, but it should also include more specific and in-depth training in topics such as cloud configurations, access management and monitoring, network segmentation, and vulnerability scans. Your entire IT staff doesn’t need to be security experts, but everyone should have a good understanding of the current threat landscape and know how to spot any suspicious activity within your systems.

And, like all cybersecurity training, it’s important to use a program that focuses on helping your team build better, more secure habits, rather than simply throwing information at them. An important aspect of this is to simply make it easier for your IT team to do the job they need to do and do it securely. Because IT departments have highly specialized knowledge, it can be easy for business leadership to simply leave them to do what they think makes sense. However, executives should be actively involved in giving immediate feedback and listening to what IT staff need to make sure they can do their jobs efficiently and safely.