by Doug Kreitzberg | Dec 9, 2020 | Business Email Compromise, Data Breach, Disinformation, Human Factor, Phishing

Yesterday, I received an email from a business acquaintance that included an invoice. I knew this person and his business but did not recall him ever doing anything for me that would necessitate a payment. I called him about the email and he said that his account had indeed been hacked and those emails were not from him. What occurred was an example of business email compromise (BEC) using stolen credentials.

Typically, BEC is a form of cyber attack where attackers create fake emails that impersonate executives in order to convince employees to send money to a bank account controlled by the bad guys. According to the FBI, BEC is the costliest form of cyber attack, scamming businesses out of $1.7 billion in 2019 alone. One reason these attacks are becoming so successful is that attackers are upping their game: instead of creating fake email addresses that look like they belong to a CEO or a vendor, attackers are now learning to steal login info to make their scams that much more convincing.

By compromising credentials, BEC attackers have opened up multiple new avenues to carry out their attacks and increase the chance of success. Among all the ways compromised credentials can be used for BEC attacks, here are 3 that every business should know about.

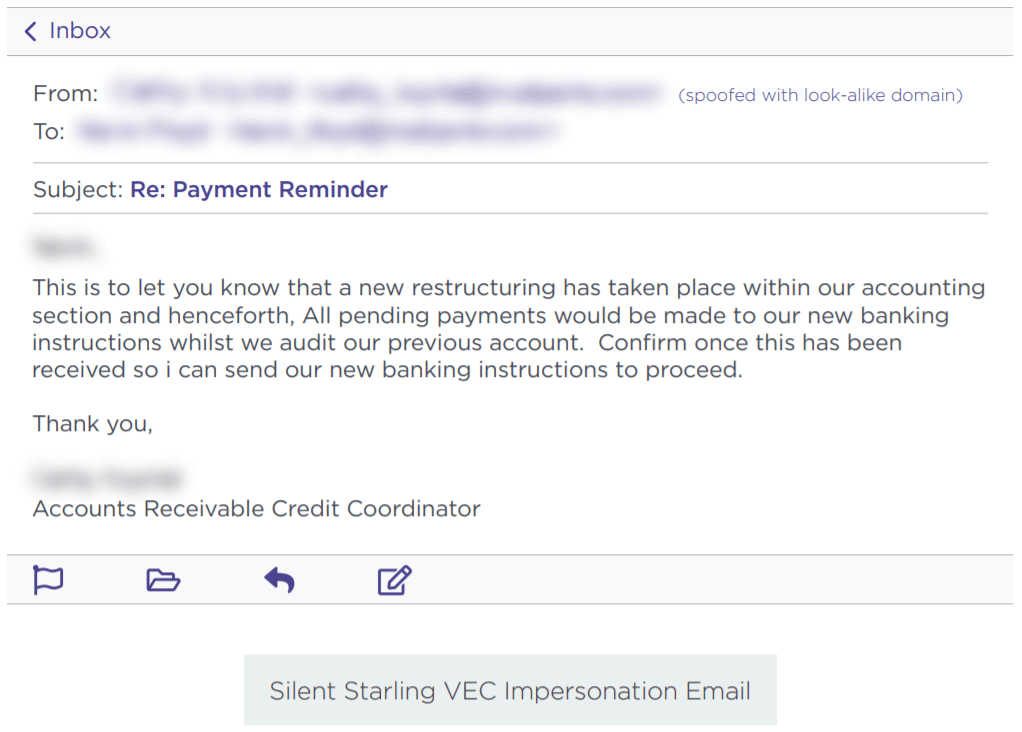

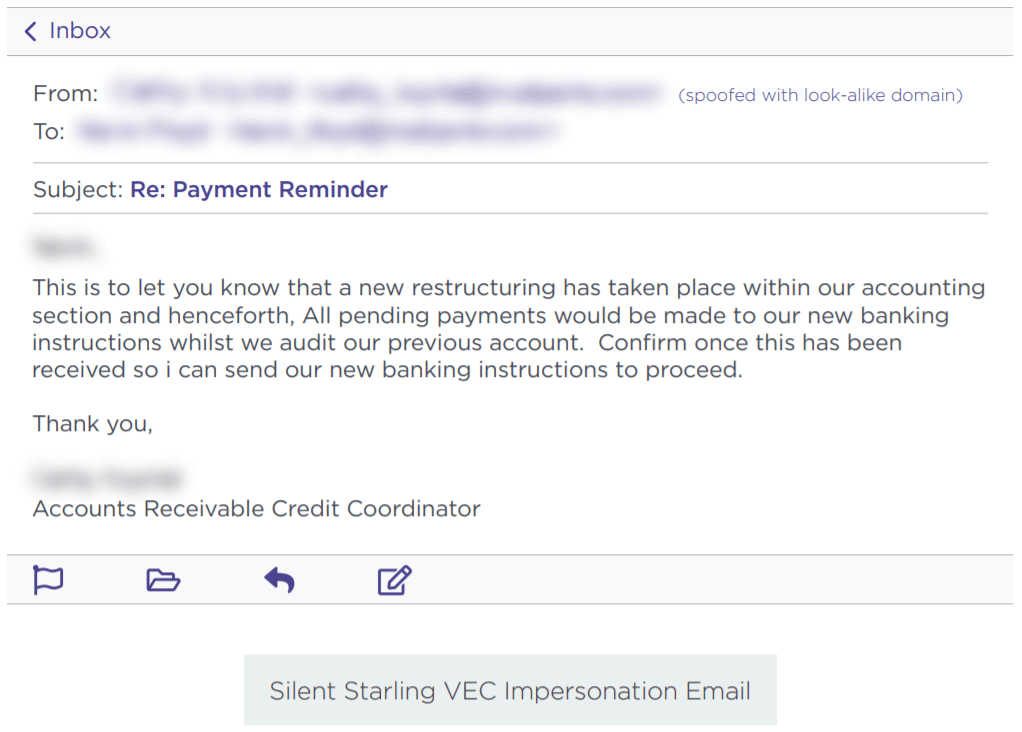

Vendor Email Compromise

One way BEC attackers can use compromised credentials has been called vendor email compromise. The name, however, is a little misleading, because vendors aren’t actually the target of the attack. Instead, they are the means to carry out an attack on a business. Essentially, BEC attackers will compromise the email credentials of an employee in the billing department of a vendor, then send invoices from that email to businesses requesting they make payment to a bank account controlled by the attackers.

Source: Agari

Inside Jobs

Another way attackers can use compromised credentials to carry out BEC scams is to use the credentials of someone in the finance or accounting department of an organization to make payment requests to other employees and suppliers. By using the actual email of someone within the company, payment requests look far more legitimate and increase the chance that the scam will succeed.

What’s more, attackers can use compromised credentials of someone in the billing department to even target customers for payment. Of course, if the customers make a payment, it goes to the attackers and not to the company they think they are paying. This is a new method of BEC, but one that is gaining steam. In a press release earlier this year, the FBI warned of the use of compromised credentials in BEC to target customers.

Advanced Intel Gathering

Another method to use compromised credentials for BEC doesn’t even involve using the compromised account to request payments. Instead, attackers will gain access to the email account of an employee in the finance department and simply gather information. With enough time, attackers can study who the business releases funds to, how often, and what the payment requests look like. With all of this information under their belt, attackers will then create a near-perfect impersonation of the entity requesting payment and send the request exactly when the business is expecting it.

Attackers have even figured out a way to retain access to an employee’s email after they’ve been locked out of the account. Once they’ve gained access to an employee’s inbox, attackers will often set the account to auto-forward any emails the employee receives to an account controlled by the attacker. That way, if the employee changes their password, the attacker can still view every message the employee receives.
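To make the auto-forwarding trick concrete, here is a minimal sketch of the kind of audit an IT team could run. It assumes forwarding rules have already been exported from the mail platform into a simple list; the `ForwardingRule` record, the addresses, and the domain names are all hypothetical and only illustrate the idea of flagging rules that send mail outside the organization.

```python
from dataclasses import dataclass


# Hypothetical record of one mailbox forwarding rule. In practice this data
# would come from an export out of your mail platform's admin tools.
@dataclass
class ForwardingRule:
    mailbox: str      # the employee's address
    forward_to: str   # where incoming mail is forwarded


def domain_of(address: str) -> str:
    """Return the lowercased domain part of an email address."""
    return address.rsplit("@", 1)[-1].strip().lower()


def external_forwards(rules, internal_domains):
    """Return the rules that forward mail to a domain outside the organization."""
    allowed = {d.lower() for d in internal_domains}
    return [r for r in rules if domain_of(r.forward_to) not in allowed]


# Illustrative data only.
rules = [
    ForwardingRule("ap@example.com", "archive@example.com"),
    ForwardingRule("cfo@example.com", "cfo.backup@mail-example.net"),
]
flagged = external_forwards(rules, {"example.com"})
for rule in flagged:
    print(f"Review: {rule.mailbox} forwards to {rule.forward_to}")
```

A flagged rule is not proof of compromise, since some external forwarding is legitimate, but each one is worth confirming with the mailbox owner.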

What you can do

All three of these emerging attack methods should make businesses realize that BEC is a real and dangerous threat. It can be far harder to detect a BEC attack when the attackers are sending emails from a real address or using insider information from compromised credentials to expertly impersonate a vendor. Attackers can gain access to these credentials in a number of ways. First, through initial phishing attacks designed to capture employee credentials. Earlier this year, for example, attackers launched a spear phishing campaign to gather the credentials of finance executives’ Microsoft 365 accounts in order to then carry out a BEC attack. Attackers can also pay for credentials on the dark web that were stolen in past data breaches. Even though these breaches often involve credentials of employees’ personal accounts, if an employee uses the same login info for every account, then attackers will have easy access to carry out their next BEC scam.

While the use of compromised credentials can make BEC harder to detect, there are a number of things organizations can do to protect themselves. First, businesses should ensure all employees (and vendors!) are properly trained in spotting and identifying phishing attacks. Second, organizations should require proper password management for all users. Employees should use different credentials for every account, and multi-factor authentication should be enabled for vulnerable accounts such as email. Lastly, organizations should disable or limit auto-forwarding to prevent attackers from continuing to capture emails received by a targeted employee.

Businesses should also ensure employees in the finance department receive additional BEC training. A report earlier this year found an 87% increase in BEC attacks targeting employees in finance departments. Ensuring employees in the finance department know, for example, to confirm any changes to a vendor’s bank information before releasing funds, is key to protecting your organization from falling prey to the increasingly sophisticated BEC landscape.
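The "confirm before releasing funds" rule can be sketched as a simple hold check. This is an illustration under stated assumptions, not a payment-system integration: the `BankDetails` record, the `vendor_master` dictionary, and the vendor IDs are all hypothetical stand-ins for whatever vendor records your finance team already keeps.

```python
from dataclasses import dataclass


# Hypothetical shape of the bank details kept on file for each vendor.
@dataclass(frozen=True)
class BankDetails:
    account_number: str
    routing_number: str


def needs_callback(vendor_id, invoice_details, vendor_master):
    """Return True when a payment should be held for out-of-band confirmation."""
    on_file = vendor_master.get(vendor_id)
    # An unknown vendor, or any mismatch with the details on file, holds the payment.
    return on_file is None or on_file != invoice_details


# Illustrative data only.
vendor_master = {"V-100": BankDetails("000123456", "110000000")}
same = BankDetails("000123456", "110000000")
changed = BankDetails("999888777", "110000000")

print(needs_callback("V-100", same, vendor_master))     # False
print(needs_callback("V-100", changed, vendor_master))  # True
print(needs_callback("V-999", same, vendor_master))     # True
```

When the check returns True, the confirmation should go through a phone number already on file, not one taken from the invoice or the email that requested the change.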

by Doug Kreitzberg | Nov 19, 2020 | Artificial Intelligence, Coronavirus, Disinformation, Human Factor, Misinformation, Reputation, social engineering, Social Media

By now, most everyone has heard about the threat of misinformation within our political system. At this point, fake news is old news. However, this doesn’t mean the threat is any less dangerous. In fact, over the last few years misinformation has spread beyond the political world and into the private sector. From a fake news story claiming that Coca-Cola was recalling Dasani water because of a deadly parasite in the bottles, to false reports that an Xbox killed a teenager, more and more businesses are facing online misinformation about their brands, damaging the reputations and financial stability of their organizations. While businesses may not think to take misinformation attacks into account when evaluating the cyber threat landscape, it’s more and more clear that misinformation should be a primary concern for organizations. Just as businesses are beginning to understand the importance of being cyber-resilient, organizations also need to have policies in place to stay misinformation-resilient. This means organizations need to start taking both a proactive and a reactive stance towards future misinformation attacks.

Perhaps the method of disinformation we are all most familiar with is the use of social media to quickly spread false or sensationalized information about a person or brand. However, there are a number of different guises disinformation can take. Fraudulent domains, for example, can be used to impersonate companies in order to misrepresent brands. Attackers also create copycat sites that look like your website, but actually contain malware that visitors download when they visit the site. Inside personnel can weaponize digital tools to settle scores or hurt the company’s reputation: the water-cooler rumor mill can now play out in very public and spreadable online spaces. And finally, attackers can create doctored videos called deepfakes that convincingly show public figures saying things on camera they never actually said. You’ve probably seen deepfakes of politicians like Barack Obama or Nancy Pelosi, but deepfakes impersonating business leadership can also be shared online or circulated among staff.

With all of the different ways misinformation attacks can be used against businesses, it’s clear organizations need to be prepared to stay resilient in the face of any misinformation that appears. Here are 5 steps all organizations should take to build and maintain a misinformation-resilient business:

1. Monitor Social Media and Domains

Employees across various departments of your organization should constantly keep their ears to the ground by closely monitoring for any strange or unusual activity by and about your brand. Your marketing and social media team should regularly keep an eye on any chatter online about the brand and evaluate the veracity of claims being made, where they originate, and how widely the information is being shared.

At the same time, your IT department should be continuously looking for new domains that mention or closely resemble your brand. It’s common for scammers to create domains that impersonate brands in order to spread false information, phish for private information, or just sow confusion. The frequency of domain spoofing has skyrocketed this year, as bad actors take advantage of the panic and confusion surrounding the COVID-19 pandemic. When it comes to spotting deepfakes, your IT team should invest in software that can detect whether images and recordings have been altered.
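One simple way to look for domains that closely resemble your brand is to compare each newly seen domain against your own using edit distance, the number of single-character changes needed to turn one string into the other. The sketch below is a hedged illustration: the list of new domains is made up, the threshold of 2 is an arbitrary assumption, and a real monitoring setup would pull candidates from a registration feed or a commercial service.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete a character from a
                            curr[j - 1] + 1,      # insert a character into a
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def lookalikes(brand_domain, candidates, max_distance=2):
    """Return candidates that are close to, but not identical to, the brand domain."""
    brand = brand_domain.lower()
    hits = []
    for candidate in candidates:
        name = candidate.lower()
        if name != brand and edit_distance(name, brand) <= max_distance:
            hits.append(candidate)
    return hits


# Illustrative data only: "1" swapped for "l", and "rn" standing in for "m".
new_domains = ["examp1e.com", "example.com", "exarnple.com", "unrelated.org"]
suspicious = lookalikes("example.com", new_domains)
print(suspicious)  # ['examp1e.com', 'exarnple.com']
```

Edit distance catches character swaps and small insertions, but it misses lookalikes that add whole words (such as a brand name followed by "-support"), so it works best as one signal among several.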

Across all departments, your organization needs to keep an eye out for any potential misinformation attacks. Departments also need to be in regular communication with each other and with business leadership to evaluate the scope and severity of threats as soon as they appear.

2. Know When You Are Most Vulnerable

Often, scammers behind misinformation attacks are opportunists. They look for big news stories, moments of transition, or times when investors will be keeping a close eye on an organization in order to create attacks with the biggest impact. Broadcom’s shares plummeted after a fake memorandum from the US Department of Defense claimed an acquisition the company was about to make posed a threat to national security. Organizations need to stay vigilant for moments that scammers can take advantage of, and prepare a response to any potential attack that could arise.

3. Create and Test a Response Plan

We’ve talked a lot about the importance of having a cybersecurity incident response plan, and the same rule is true for responding to misinformation. Just as with a cybersecurity attack, you shouldn’t wait to figure out a response until after an attack has happened. Instead, organizations need to form a team from various levels within the company and create a detailed plan of how to respond to a misinformation campaign before it actually happens. Teams should know what resources will be needed to respond, who internally and externally needs to be notified of the incident, and which team members will respond to which aspect of the incident.

It’s also important not just to create a plan, but to test it as well. Running periodic simulations of a disinformation attack will not only help your team practice their response, but can also show you what areas of the response aren’t working, what wasn’t considered in the initial plan, and what needs to change to make sure your organization’s response runs like clockwork when a real attack hits. Depending on the organization, it may make sense to include disinformation attacks within the cybersecurity response plan or to create a new plan and team specifically for disinformation.

4. Train Your Employees

Employees throughout the organization should also be trained to understand the risks disinformation can pose to the business, and how to effectively spot and report any instances they may come across. Employees need to learn how to question images and videos they see, just as they should be wary of links in an email. They should be trained on how to quickly respond internally to disinformation originating from other insiders like disgruntled employees, and key personnel need to be trained on how to quickly respond to disinformation in the broader digital space.

5. Act Fast

Putting all of the above steps in place will enable organizations to take swift action against disinformation campaigns. Fake news spreads fast, so organizations need to act just as quickly. Putting your response plan in motion, communicating with your social media followers and stakeholders, and contacting social media platforms to have the disinformation content removed all need to happen quickly for your organization to stay ahead of the attack.

It may make sense to think of cybersecurity and misinformation as two completely separate issues, but more and more businesses are finding out that the two are closely intertwined. Phishing attacks rely on disinformation tactics, and fake news uses technical sophistication to make its content more convincing and harder to detect. In order to stay resilient to misinformation, businesses need to incorporate these issues into larger conversations about cybersecurity across all levels and departments of the organization. Preparing now and having a response plan in place can make all the difference in maintaining your business’s reputation when false information about your brand starts making the rounds online.

by Doug Kreitzberg | Aug 19, 2020 | Artificial Intelligence, Disinformation, Human Factor, Phishing, social engineering

Earlier this month, a study by University College London identified the top 20 security issues and crimes likely to be carried out with the use of artificial intelligence in the near future. Experts then ranked the list of future AI crimes by the potential risk associated with each crime. While some of the crimes are what you might expect to see in a movie (such as autonomous drone attacks or using driverless cars as a weapon), it turns out 4 out of the 6 crimes that are of highest concern are less glamorous, and instead focus on exploiting human vulnerabilities and biases.

Here are the top 4 human-factored AI threats:

Deepfakes

The ability of AI to fabricate visual and audio evidence, commonly called deepfakes, is the most concerning threat overall. The study warns that the use of deepfakes will “exploit people’s implicit trust in these media.” The concern is not only related to the use of AI to impersonate public figures, but also the ability to use deepfakes to trick individuals into transferring funds or handing over access to secure systems or sensitive information.

Scalable Spear-Phishing

Other high-risk, human-factored AI threats include scalable spear-phishing attacks. At the moment, writing phishing emails that target specific individuals requires time and energy to learn the victim’s interests and habits. However, AI can expedite this process by rapidly pulling information from social media or impersonating trusted third parties. AI can therefore make spear-phishing more likely to succeed and far easier to deploy on a mass scale.

Mass Blackmail

Similarly, the study warns that AI can be used to harvest large amounts of information about individuals, identify those most vulnerable to blackmail, then send tailored threats to each victim. These large-scale blackmail schemes can also use deepfake technology to create fake evidence against those being blackmailed.

Disinformation

Lastly, the study highlights the risk of using AI to author highly convincing disinformation and fake news. Experts warn that AI will be able to learn what type of content will have the highest impact, and generate different versions of one article to be published by a variety of (fake) sources. This tactic can help disinformation spread even faster and make it seem more believable. Disinformation has already been used to manipulate political events such as elections, and experts fear the scale and believability of AI-generated fake news will only increase the impact disinformation will have in the future.

The results of the study underscore the need to develop systems to identify AI-generated images and communications. However, that might not be enough. According to the study, when it comes to spotting deepfakes, “[c]hanges in citizen behaviour might [ ] be the only effective defence.” With the majority of the highest risk crimes being human-factored threats, focusing on our own ability to understand ourselves and developing behaviors that give us the space to reflect before we react may therefore become the most important tool we have against these threats.

by Doug Kreitzberg | Jun 22, 2020 | Cybersecurity, Disinformation, Phishing

In 1989 the U.S. Postal Service issued new stamps that featured four different kinds of dinosaurs. While the stamps look innocent enough, their release was the source of controversy among paleontologists, and even serves as an example of how misinformation works by making something false appear to be true.

The controversy revolves around the inclusion of the brontosaurus, which, according to scientists at that time, never existed. In 1879, paleontologist O.C. Marsh discovered the bones of what he thought was a new species of dinosaur. He called it the brontosaurus. However, as more scientists discovered similar fossils, they realized that what Marsh had found was in fact a species previously identified as an apatosaurus, which, ironically, is Greek for “deceptive lizard.” Paleontologists were therefore rightly upset to see the brontosaurus included on a stamp with real dinosaurs.

Over 30 years later, however, these stamps may have something to teach us about how disinformation works today. They show how disinformation is not simply about falsehoods; it’s about how those falsehoods are presented so as to seem true.

The stamps help illustrate this in three ways:

1) Authority

One of the ways something can appear to be true is when the information comes from a figure of authority. The fact that the stamps were officially released by the U.S. government gives the information contained on them the appearance of truth. Of course, no one would think the USPS is an authority on dinosaurs, and yet the very position of authority the postal service occupies seems to serve as a guarantee of the truth of what is presented. The appearance of authority, however wrongly placed it is, is often enough for us to believe something to be true.

This is a tactic used by scammers all the time. It’s the reason why you’ve probably gotten a lot of robocalls claiming to be the IRS. Phishing emails also use this tactic by spoofing the ‘from’ field and using logos of businesses and government agencies. We too often assume that, just because information appears to be coming from an authority, it must be true.
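As a rough illustration of how a borrowed-authority ‘from’ field can be checked, the sketch below uses Python’s standard `email` package to compare the name a message displays with the domain it was actually sent from, and to see whether replies would go somewhere else. The sample message, the `known_senders` mapping, and the `.example` domains are all made up for the example; this is a heuristic, not how any particular mail filter works.

```python
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value):
    """Return the lowercased domain of the address in a header, or '' if absent."""
    _, address = parseaddr(header_value or "")
    return address.rsplit("@", 1)[-1].lower() if "@" in address else ""


def from_header_warnings(raw_message, known_senders):
    """Flag messages whose display name claims an authority the domain does not match.

    known_senders maps a lowercase keyword (hypothetical, e.g. "irs") to the
    domain that sender is expected to use.
    """
    msg = message_from_string(raw_message)
    display_name, _ = parseaddr(msg.get("From", ""))
    from_domain = domain_of(msg.get("From"))
    reply_domain = domain_of(msg.get("Reply-To"))

    warnings = []
    for keyword, expected in known_senders.items():
        if keyword in display_name.lower() and from_domain != expected:
            warnings.append(f"display name mentions '{keyword}' but domain is {from_domain}")
    if reply_domain and reply_domain != from_domain:
        warnings.append(f"replies go to {reply_domain}, not {from_domain}")
    return warnings


# Illustrative message only.
raw = (
    "From: IRS Refunds <refunds@irs-payments.example>\n"
    "Reply-To: claims@collector.example\n"
    "Subject: Action required\n"
    "\n"
    "Please confirm your details.\n"
)
warnings = from_header_warnings(raw, {"irs": "irs.gov"})
for warning in warnings:
    print(warning)
```

A check like this only looks at what the headers claim. It will not catch a message sent from a genuinely compromised account, which is exactly why the appearance of authority is so effective.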

2) Truths and a Lie

Another way something false can appear true is by placing what is fake among things that are actually true. The fact that the other stamps in the collection (the tyrannosaurus, the stegosaurus, and the pteranodon) are real gives the brontosaurus the appearance of truth. By placing one piece of false information alongside recognizably true information, that piece of false information starts to look more and more like a truth.

Fake news on social media uses this tactic all the time. Phishing attacks also take advantage of this by replicating certain aspects of legitimate emails. This might include mentioning information in the news, such as COVID-19, or even including things like an unsubscribe link at the end of the email. This tactic works by using legitimate information and elements in an email to cover up what is fake.

3) Anchoring

The US Postal Service did not invent the brontosaurus: in fact, the American Museum of Natural History named a skeleton brontosaurus in 1905. Once a claim is stated as truth, it becomes very hard to dislodge. This was actually the reasoning the US Postal Service used when they were challenged: “Although now recognized by the scientific community as Apatosaurus, the name Brontosaurus was used for the stamp because it is more familiar to the general population.” Anchoring is a key aspect of disinformation, especially with regard to its persistence.

Beyond Appearances

Overall, what the brontosaurus stamp shows us is that our ability to discern the true from the false largely depends on how information is presented to us. Scammers and phishers have understood this for a long time. The first step in critically engaging with information online is therefore to recognize that just because something appears true does not, in fact, make it true. Given the continued rise of disinformation, this is a lesson that is more important now than ever. In fact, it is unlikely disinformation will ever become extinct.